📖 Engineering Everything with eBPF is available to read online here, or you can download the PDF for offline access.

This is the multi-page printable view of this section. Click here to print.

© 2025 Hamza Megahed – Engineering Everything with eBPF

License: Creative Commons Attribution 4.0 International (CC BY 4.0)

This license lets anyone copy, redistribute, remix, transform, and build upon the material for any purpose—even commercially—so long as they give appropriate credit and indicate if changes were made.

| Part of the project | License |

|---|---|

| Kernel-space eBPF examples | GPL-2.0-or-later |

| User-space loaders, scripts, utilities | Apache 2.0 |

| Book text & figures | CC BY 4.0 (this page) |

Running eBPF programs generally requires root access or the CAP_BPF capability. The kernel verifier guards against crashes, but inefficient or incorrectly attached programs can still degrade performance or disrupt networking. Test all examples on a non-production system first. Neither the author shall be liable for any damages arising from the use of this material.

Running eBPF programs requires elevated privileges and can affect system stability. Follow the examples at your own risk. Neither the author nor the publisher shall be liable for any damages arising from the use of this material.

Hello and welcome. Engineering Everything with eBPF is your friendly guide to eBPF on Linux. eBPF lets you run tiny programs inside the kernel so you can watch what the system is doing, filter network traffic, and even add safety checks—all without changing kernel source code. That sounds powerful, and it is. But it can also feel confusing the first time you see strange section names like SEC("xdp") or long helper calls. Do not worry. Every chapter walks you through one small idea at a time, then shows a real example working on your own machine.

Why this book exists

When I began learning eBPF, I kept bouncing between blog posts and mailing-list threads, piecing things together. I wrote Engineering Everything with eBPF so you do not have to repeat that maze. You will start by loading a five-line program, see the result right away, and gradually build up to practical tools for tracing disk I/O, shaping network traffic, and securing containers.

Plain language, lots of examples

I use short sentences, clear words, and plenty of code. Each new term—map, verifier, tail call—appears next to a tiny program you can copy, run, and explore. After you run the code, the explanation will make more sense. If something still feels cloudy, keep reading; later chapters revisit the idea from a different angle.

Tested environment

All code listings were compiled and executed on Linux kernel 6.12.22, with Clang/LLVM 17 and libbpf 1.5. If you use this kernel (or a newer one) the examples should work exactly as printed. When newer kernels add handy helpers or map types, I point them out and tell you whether you need to adjust your code.

Every example used in this book lives in the public repository

https://github.com/Hamza-Megahed/Engineering-Everything-with-eBPF-Code

What you need

clang, lld, make, and typical build toolsCAP_BPF capability) to load programsHow to read

Skim first, run later. Browse the chapter, copy the program, run it, then come back and read the full explanation. Learning speeds up when you see the output with your own eyes. If a term is still unclear, do not worry; it often becomes obvious after the next example.

By the final chapter you will have a small toolbox of eBPF programs you can adapt to real-world tasks—debugging, performance tuning, or keeping a service safe. Take your time, run the code, and enjoy the process. Everything will click, step by step. Let’s begin our journey into eBPF together.

We’re happy you want to make Engineering Everything with eBPF better.

The steps below keep changes smooth and easy.

Open an issue first

Fork the repo and create a branch

fix-ringbuf-example or add-cgroup-section.Write in the same simple style

```c for C, ```bash for shell).Test what you add

Open a pull request

Fixes #123).Review process

Licensing

char LICENSE[] SEC("license") = "GPL";

"BSD", "MIT", or any SPDX-compatible identifier if you prefer a more permissive license.

Code of Conduct

eBPF (Extended Berkeley Packet Filter) is a revolutionary in-kernel virtual machine that allows developers to execute custom programs directly within the Linux kernel. Unlike traditional approaches, which require recompiling or modifying the kernel, eBPF enables the dynamic injection of code, providing a safe and efficient way to extend kernel functionality without rebooting the system.

eBPF programs are typically written in a restricted subset of C, compiled into bytecode, and then loaded into the kernel using the bpf() system call (which will be explained in more detail later). Once loaded, these programs can be attached to various hooks or events within the kernel, such as system calls, network packets, and tracepoints. The execution of eBPF programs is governed by strict safety checks to prevent them from crashing the kernel or accessing unauthorized memory areas.

In simpler terms, think of eBPF as a sandboxed virtual machine inside the kernel that can observe and modify system behavior safely and efficiently. This technology has opened up new possibilities for performance monitoring, networking enhancements, and security enforcement.

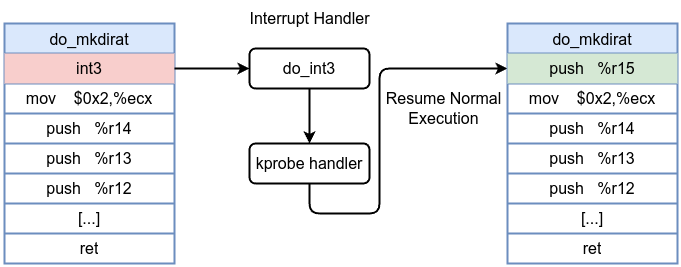

Tracing: eBPF provides powerful tracing capabilities that allow developers to observe and analyze the behavior of the kernel and user-space applications. By attaching eBPF programs to tracepoints, kprobes (kernel probes), and uprobes (user-space probes), you can gather detailed insights into system performance and diagnose issues in real-time (which will be explained later).

open() system call tracepoint and prints the filename each time a file is opened. In cybersecurity, it helps detect unauthorized file access, enabling early threat detection and compliance monitoring.Networking: eBPF enables advanced networking features by allowing custom packet filtering, modification, and routing logic to run within the kernel. This eliminates the need to copy packets to user space, reducing latency and improving performance.

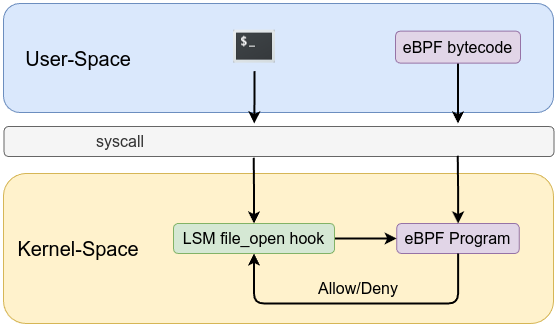

Security: eBPF enhances system security by allowing real-time monitoring and enforcement of security policies. With eBPF, you can detect and respond to security events, such as unauthorized system calls or suspicious network activity.

Observability: eBPF provides deep observability into system behavior by allowing the collection of detailed telemetry data. Unlike traditional logging and metrics, eBPF-based observability can capture low-level events without requiring changes to application code.

bcc (BPF Compiler Collection) and bpftrace allow you to profile CPU usage, memory access, and I/O operations in real-time, helping to identify performance bottlenecks and optimize system performance. In cybersecurity, this can be used to monitor for anomalous system behavior, such as unusual CPU spikes or memory access patterns, which could indicate a malware infection or unauthorized activities.Don’t worry if some of these concepts seem challenging right now; we’ll break them down with clear examples throughout the book. Now, let’s dive into the history of eBPF.

The origins of eBPF (extended Berkeley Packet Filter) trace back to its predecessor, the Berkeley Packet Filter (BPF). BPF was first introduced in 1992 by Steven McCanne and Van Jacobson at the Lawrence Berkeley Laboratory. It was designed to provide a high-performance, user-programmable packet filtering mechanism for network monitoring tools, particularly for capturing packets in real-time.

Prior to BPF, packet capturing was inefficient due to the need for constant context switching between the kernel and user space. The kernel would pass every network packet to user space, where filtering decisions were made. This approach led to significant overhead. BPF addressed this problem by enabling the execution of filtering programs directly within the kernel, allowing only relevant packets to be passed to user space. This dramatically improved performance and efficiency.

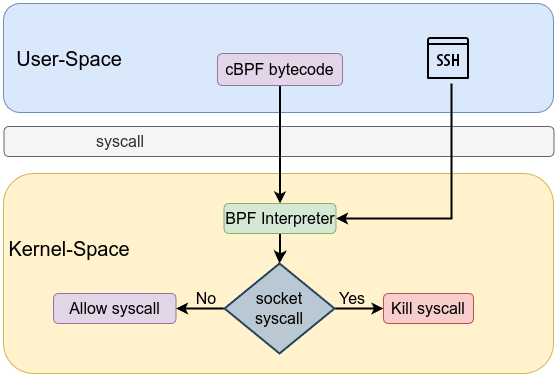

Classic BPF, often referred to as cBPF worked by allowing users to write simple programs to filter network traffic based on specific patterns. These programs were expressed as sequences of low-level instructions that the BPF virtual machine (VM) running in the kernel could interpret and execute. The most notable tool that leveraged cBPF was tcpdump, which allowed network administrators to capture and analyze network packets effectively.

Despite its efficiency, cBPF had several limitations:

BPF was first integrated into the Linux kernel in 1997, starting from version 2.1. This integration allowed kernel-level packet filtering for tools like tcpdump and iptables. Over time, the BPF VM became a reliable mechanism for filtering network traffic efficiently within the kernel space. However, as system and network performance demands grew, the limitations of classic BPF became more clear. The need for a more powerful, flexible, and extensible version of BPF led to the development of eBPF.

In 2014, the Linux kernel version 3.18 introduced extended BPF (eBPF). eBPF was a significant enhancement over classic BPF, providing a modern, flexible, and powerful framework for executing user-defined programs within the kernel. The key improvements introduced by eBPF include:

Since its introduction, eBPF has evolved rapidly, with continuous enhancements to its feature set and performance. Projects like bcc (BPF Compiler Collection), bpftool, and libbpf have made writing and deploying eBPF programs more accessible. eBPF is now used extensively for networking, observability, and security tasks in major projects like Cilium, Falco, and the Kubernetes ecosystem.

The terminology surrounding BPF and eBPF often leads to confusion due to the historical evolution of the technology. Originally, BPF referred exclusively to the Berkeley Packet Filter designed for packet capture. However, with the introduction of eBPF in 2014, the technology evolved far beyond its initial purpose, supporting tasks like tracing, performance monitoring, and security.

Despite these advancements, many tools and kernel APIs continue to use the term BPF even when referring to eBPF functionality. For example, commands like bpftool and the bpf() system call refer to eBPF features while retaining the older name. This overlap in terminology can cause misunderstandings, especially for newcomers who may not be aware of the differences between classic BPF and modern eBPF.

To avoid confusion, it’s helpful to use BPF when referring to the original packet-filtering technology and eBPF when discussing the extended capabilities introduced in the modern framework. This distinction clarifies communication and ensures a better understanding of the technology’s capabilities in the Linux ecosystem.

To illustrate classic BPF in action, consider a simple tcpdump command that captures only TCP traffic on port 80 (HTTP):

tcpdump -i eth0 'ip and tcp port 80'

This command filters packets to capture only those that are TCP-based and are using port 80. The underlying BPF bytecode generated by this command can be viewed using the -d flag:

tcpdump -i eth0 -d 'ip and tcp port 80 tcp port 80'

The output might look like this:

(000) ldh [12]

(001) jeq #0x800 jt 2 jf 12

(002) ldb [23]

(003) jeq #0x6 jt 4 jf 12

(004) ldh [20]

(005) jset #0x1fff jt 12 jf 6

(006) ldxb 4*([14]&0xf)

(007) ldh [x + 14]

(008) jeq #0x50 jt 11 jf 9

(009) ldh [x + 16]

(010) jeq #0x50 jt 11 jf 12

(011) ret #262144

(012) ret #0

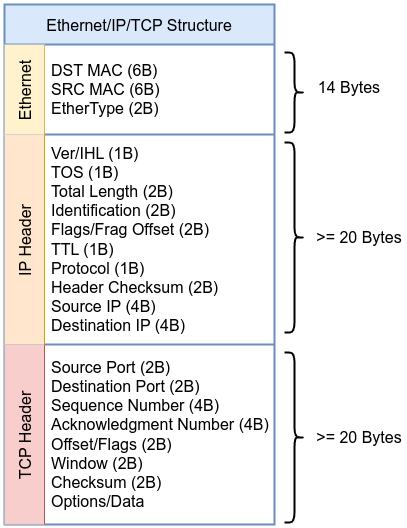

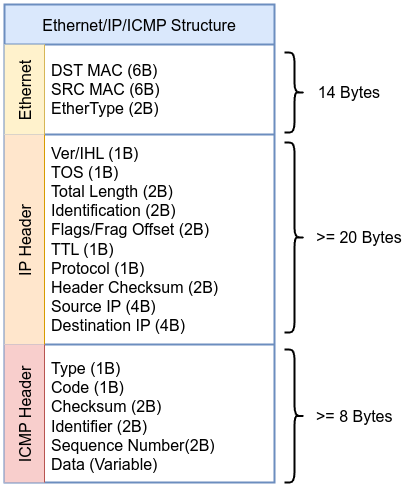

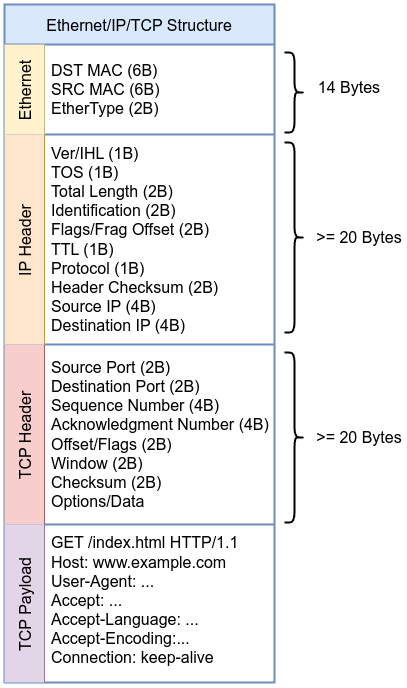

Before diving into the example, take a moment to review the following diagram of the Ethernet, IP, and TCP headers. This will help you visualize how the packet is structured, making it easier to follow along with each step in the BPF bytecode. Keep this scheme in mind as we go through the example to understand how each instruction maps to specific parts of the packet.

Here’s the breakdown of each instruction, including the relevant source code location and snippets from the Linux kernel where these actions are defined or represented.

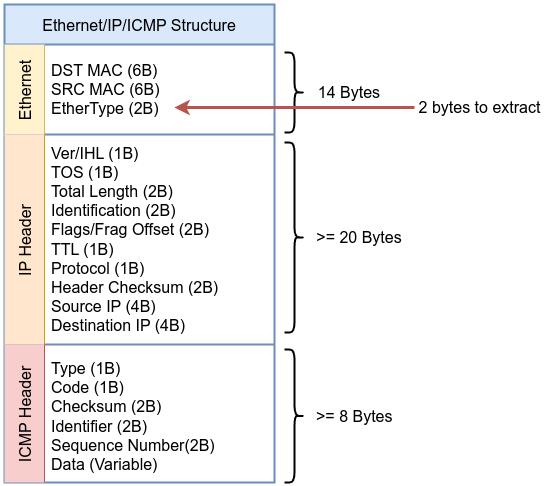

Instruction 000 ldh [12]: Load the 16-bit EtherType field at offset 12 in the packet as described in the kernel source code include/uapi/linux/if_ether.h

#define ETH_HLEN 14 /* Total Ethernet header length */

#define ETH_P_IP 0x0800 /* IPv4 EtherType */

Instruction 001 jeq #0x800 jt 2 jf 12: If the EtherType is 0x800 (IPv4), jump to instruction 2; otherwise, jump to instruction 12.

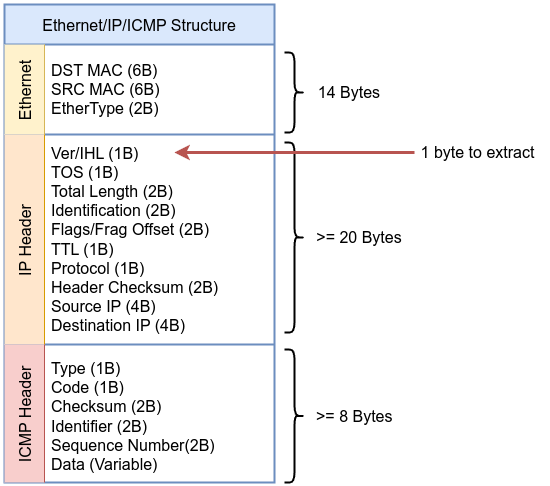

Instruction 002 ldb [23]: Load the 8-bit protocol field at offset 23 in the IP header.

Instruction 003 jeq #0x6 jt 4 jf 12: If the protocol is 6 (TCP), jump to instruction 4; otherwise, jump to instruction 12 as described in the kernel source code include/uapi/linux/in.h

#define IPPROTO_TCP 6 /* Transmission Control Protocol */

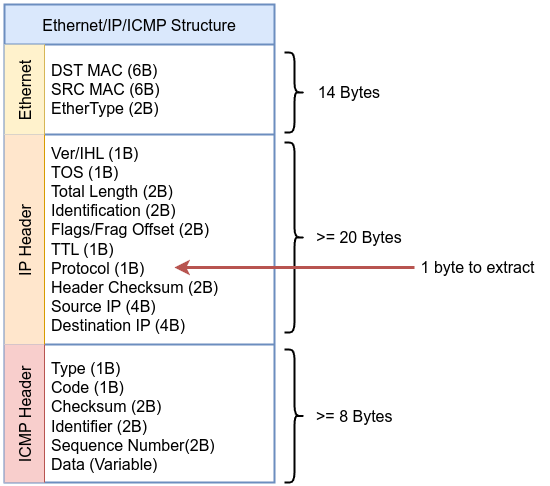

Instruction 004: ldh [20]: Load the 16-bit TCP source port at offset 20.

Instruction 005: jset #0x1fff jt 12 jf 6: Check if the lower 13 bits of the TCP header are non-zero; if true, jump to instruction 12; otherwise, jump to instruction 6.

Instruction 006: ldxb 4*([14]&0xf): Load the value in the TCP header, adjusting by scaling based on the value in the IP header.

Instruction 007: ldh [x + 14]: Load the TCP destination port, located at offset 14 from the start of the packet.

Instruction 008: jeq #0x50 jt 11 jf 9: If the destination port is 80 (0x50 in hexadecimal), jump to instruction 11; otherwise, jump to instruction 9.

Instruction 009: ldh [x + 16]: Load the TCP source port, located at offset 16 from the start of the packet.

Instruction 010: jeq #0x50 jt 11 jf 12: If the source port is 80 (0x50), jump to instruction 11; otherwise, jump to instruction 12.

Instruction 011: ret #262144: If all conditions match, capture the packet (return the packet length).

Instruction 012: ret #0: If the conditions do not match, drop the packet.

These instructions illustrate a classic BPF packet filter that matches IPv4 and TCP traffic on port 80 (HTTP). The constants and structures provided are standard definitions in the Linux kernel. This bytecode demonstrates how classic BPF allows efficient filtering by executing a series of low-level instructions directly in the kernel.

80. Without explicitly defining both ports, the filter would not distinguish between source and destination ports, simplifying the bytecode. These additional checks ensure that packets using port 80 in either direction are captured.

Let’s explore the differences between classic BPF and eBPF to better understand the enhanced capabilities of eBPF.

As mentioned, Berkeley Packet Filter (BPF) was originally developed to filter network packets efficiently. It enabled in-kernel filtering of packets based on simple criteria. However, as the need for more versatile and performant filtering and monitoring grew, extended BPF (eBPF) emerged as a powerful evolution. eBPF transforms BPF into a general-purpose execution engine within the kernel, providing significantly more flexibility and efficiency.

The following 6 points explores the key differences between eBPF and classic BPF, based on Kernel Documentation.

Classic BPF is primarily used for packet filtering. Its primary use case is in network monitoring tools like tcpdump, where it allows users to specify packet filtering rules directly within the kernel.

eBPF, however, has vastly expanded use cases. eBPF is used in:

Classic BPF has a very limited instruction set, primarily designed for basic operations like loading data, performing simple arithmetic, jumping, and returning values.

eBPF, in contrast, expands the instruction set significantly. It introduces new operations like:

Additionally, eBPF supports 64-bit operations (via BPF_ALU64) and atomic operations like BPF_XADD, enabling more sophisticated processing directly in the kernel.

Classic BPF only has two registers (A and X), with limited memory and stack space. The operations on data are simple and restricted to 32-bit width, and these registers are manipulated with specific instructions that limit flexibility.

eBPF greatly improves on this by expanding the number of registers from 2 to 10. eBPF’s calling conventions are designed for high efficiency, utilizing registers (R1-R5) to pass arguments directly into the kernel functions. After the function call, registers R1-R5 are reset, and R0 holds the return value.This allows for more complex operations and handling of more data. Registers in eBPF are also 64-bit wide, which enables direct mapping to hardware registers on modern 64-bit processors. This wider register set and the introduction of a read-only frame pointer (R10) allow eBPF to handle more complex operations like function calls with multiple arguments and results.

Classic BPF is interpreted by the kernel, This means the kernel would read and execute each instruction one by one which adds overhead to the execution of each instruction. This can be a limiting factor when performing more complex operations or filtering on high-throughput systems.

eBPF is designed with Just-In-Time (JIT) compilation in mind, meaning that eBPF programs can be translated into optimized native machine code at runtime. The JIT compiler can convert eBPF bytecode to highly efficient machine instructions, reducing the overhead significantly. This allows eBPF programs to perform at speeds comparable to native code execution, even for complex tasks like system call filtering and network traffic analysis.

Classic BPF uses a simple verifier that checks for program safety by ensuring there are no errors like out-of-bounds memory access.

eBPF, on the other hand, includes a more sophisticated verifier that ensures the program complies to a set of strict rules before execution. The verifier checks for issues like:

This makes eBPF programs much safer, even when they are running with elevated privileges or performing sensitive tasks in the kernel.

Classic BPF: The original BPF format had a program size limit of 4096 instructions, and programs had to be very efficient to avoid exceeding this limit. The limited number of registers and operations meant that programs were usually simple and short.

eBPF: While eBPF still retains a 4096 instruction limit for kernels before 5.2 and one million instructions for kernel starting from 5.2, its expanded instruction set and register size allow for significantly more complex programs. Additionally, the eBPF verifier ensures that programs are safe, loop-free, and deterministic. Furthermore, there are restrictions on the number of arguments that can be passed to kernel functions (currently up to five), although these can be relaxed in future versions of eBPF. Tail calls also allow chaining multiple eBPF programs together, effectively extending the overall execution beyond the single-program instruction limit.

Now, let’s dive into real-world examples to see how eBPF is applied in action and understand its practical benefits.

Netflix relies heavily on maintaining a high level of performance and reliability for its massive streaming infrastructure. With millions of users accessing content simultaneously, identifying performance bottlenecks and ensuring smooth streaming is critical. To address these challenges, Netflix leverages eBPF for advanced performance tracing and debugging.

eBPF allows Netflix engineers to dynamically instrument production systems to gain real-time insights into various kernel and application-level events without impacting system performance. Tools like BPFtrace and bcc (BPF Compiler Collection) help trace everything from CPU utilization to memory allocation and disk I/O latency. eBPF enables the monitoring of these metrics without requiring code modifications or system restarts, providing a seamless debugging experience.

One of the key benefits for Netflix is the ability to analyze issues in real time. When a problem arises, engineers can deploy eBPF-based tracing programs to identify the root cause immediately. This minimizes downtime and ensures rapid resolution. For example, if a particular server experiences unexpected delays, eBPF can quickly pinpoint whether the issue stems from the network stack, disk latency, or a CPU bottleneck.

Moreover, eBPF’s low overhead makes it suitable for use in high-traffic production environments. Unlike traditional tracing tools, which often introduce performance degradation, eBPF maintains efficiency while providing deep insights. This combination of power and performance helps Netflix maintain the quality of service users expect.

Facebook (now Meta) handles billions of user interactions daily, requiring robust and efficient load-balancing mechanisms. To achieve this, Facebook developed Katran, a high-performance, eBPF-based layer 4 load balancer. Katran powers the edge network for Facebook’s backend services, providing scalable and reliable traffic distribution.

Katran uses XDP eBPF to offload load-balancing tasks to the Linux kernel, bypassing some of the traditional limitations of user-space load balancers. By running directly in the kernel, eBPF ensures that packet processing is both fast and efficient, reducing the need for context switches and avoiding bottlenecks.

A key feature of Katran is its ability to dynamically adapt to changes in traffic patterns. eBPF programs enable the load balancer to update its forwarding rules on the fly without requiring restarts. This dynamic updating capability ensures minimal disruption and allows Facebook to handle sudden traffic surges smoothly.

Cloudflare provides security and performance services to millions of websites worldwide, making it a prime target for Distributed Denial of Service (DDoS) attacks. To protect against these attacks, Cloudflare uses XDP eBPF to enhance its DDoS mitigation capabilities.

eBPF enables Cloudflare to monitor network traffic in real time, identifying and filtering out malicious packets before they reach the application layer. By deploying eBPF programs directly in the kernel, Cloudflare can analyze packet headers, track connection states, and enforce filtering rules with minimal latency.

One advantage of using eBPF for DDoS mitigation is its flexibility. eBPF allows Cloudflare to update filtering logic dynamically, adapting to new attack vectors without requiring system downtime or restarts. For example, when a new type of DDoS attack is identified, Cloudflare can deploy an updated eBPF filter to block the attack within seconds.

Moreover, eBPF’s performance efficiency ensures that mitigation measures do not degrade legitimate traffic. Cloudflare can maintain high throughput and low latency even when under attack, providing a seamless experience for end users.

eBPF offers a range of benefits that make it an attractive choice for organizations looking to improve performance, security, and flexibility in their systems. Here are some key reasons to use eBPF:

eBPF programs run directly in the kernel, avoiding the performance penalties associated with user-space operations. With Just-In-Time (JIT) compilation, eBPF code is translated into efficient machine code, ensuring minimal latency and high throughput. This makes eBPF suitable for performance-critical applications like load balancing, packet filtering, and tracing.

eBPF’s flexibility allows it to be used across various domains, including:

This flexibility allows organizations to implement a wide range of solutions without needing different tools for each use case.

eBPF enhances security by enabling real-time policy enforcement and providing deep visibility into system behavior. The eBPF verifier ensures that programs are safe to run, preventing harmful or insecure code from affecting the kernel. This safety mechanism reduces the risk of vulnerabilities and exploits.

One of eBPF’s standout features is its ability to update functionality dynamically. Whether for tracing, load balancing, or security filtering, eBPF programs can be modified and reloaded without rebooting the system. This ensures minimal downtime and enables rapid responses to changing conditions.

eBPF provides powerful tools for real-time observability. By attaching eBPF programs to various kernel and user-space events, organizations can gain detailed insights into system behavior, identify bottlenecks, and troubleshoot issues quickly.

While eBPF programs are highly portable across different Linux distributions, their portability can be affected by variations in kernel versions, architectures, and the available helper functions. The eBPF subsystem in the kernel provides a consistent foundation, but certain kernel updates or changes in architecture may introduce new features or limitations that require modifications to the eBPF programs. Despite these potential variations, eBPF still offers a relatively high degree of portability for cloud-based applications and large-scale environments, allowing organizations to deploy solutions across diverse systems with minimal overhead, provided that compatibility is taken into account.

Now that we’ve explored the general benefits of eBPF, let’s take a closer look at how these advantages specifically apply to the realm of cybersecurity.

eBPF’s capabilities make it a powerful tool for enhancing cybersecurity across multiple layers of infrastructure. By operating within the kernel, eBPF can monitor, analyze, and enforce security policies with low latency and high efficiency. This ability to operate in real time gives organizations a crucial edge in protecting against modern cyber threats.

eBPF enables deep inspection of network traffic and system calls, allowing for real-time detection of anomalous behavior. Organizations can use eBPF to build intrusion detection and prevention systems (IDS/IPS) that identify and block malicious activities such as SQL injection, malware payloads, and privilege escalation attempts. eBPF’s ability to analyze packets at the kernel level ensures minimal overhead while maintaining thorough security checks.

eBPF’s flexibility allows for rapid deployment of filters to block DDoS traffic patterns. When an attack is detected, eBPF programs can be dynamically updated to mitigate new attack vectors in real time. This adaptive capability ensures continuous protection without service disruption.

eBPF can enforce security policies at runtime by monitoring system calls and blocking unauthorized actions. For instance, if a process attempts to access restricted files or execute suspicious operations, eBPF can intervene immediately to block the action and alert administrators. This helps mitigate insider threats and potential exploits.

By attaching eBPF probes to system processes and kernel functions, organizations can monitor for integrity violations. eBPF can detect unauthorized modifications to critical processes (such as injecting code into a running process) or kernel structures, providing an additional layer of defense against cyber attacks.

eBPF can integrate with threat intelligence platforms to apply real-time security updates. For example, new threat indicators can be deployed as eBPF filters to block known malicious IP addresses, domains, or file hashes. This real-time enforcement capability helps organizations stay ahead of evolving threats.

In summary, eBPF’s combination of real-time monitoring, dynamic policy enforcement, and low-latency execution makes it a cornerstone for modern cybersecurity strategies. It could empowers organizations to defend against cyber threats while maintaining performance and system integrity.

Don’t worry if this all feels a bit abstract right now—throughout the next chapters, we’ll dive into specific examples that illustrate how eBPF can be applied to these cybersecurity challenges.

Now that we’ve seen how eBPF can enhance cybersecurity, let’s take a closer look at its architecture, which enables these powerful capabilities.

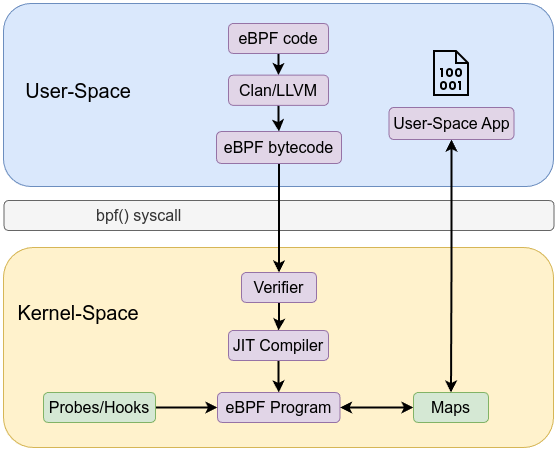

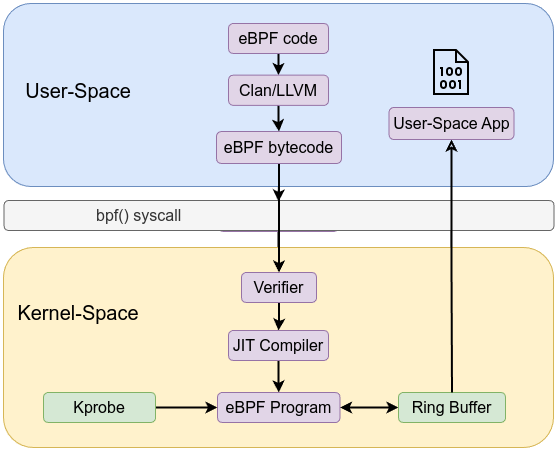

The eBPF architecture is both simple—through its rigid instruction set, maps, and helpers—and sophisticated—via the verifier, JIT, and integration points. At a high level, it is a pipeline taking user-space defined logic through a safety check and into the kernel’s execution environment. At a deep level, each component (verifier, maps, JIT) enforces strict rules that guarantee the kernel’s stability and performance. Through this layered design, eBPF achieves a rare combination: the ability to safely run custom code inside the kernel at near-native speeds while maintaining robust security and reliability guarantees.

Some of the following may not be clear to you yet, as each component will be explained in more detail in the following chapters.

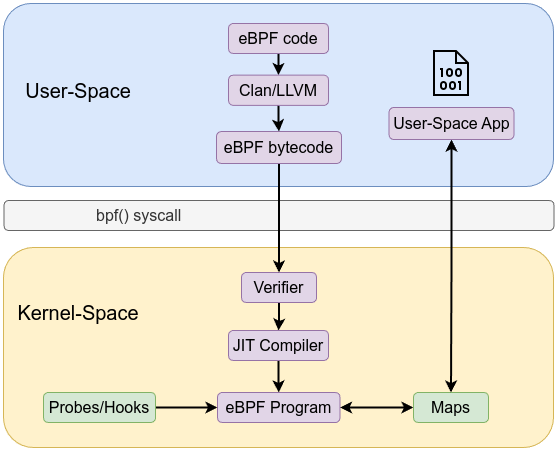

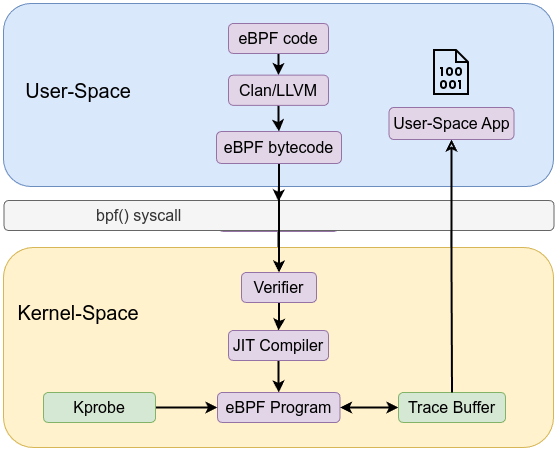

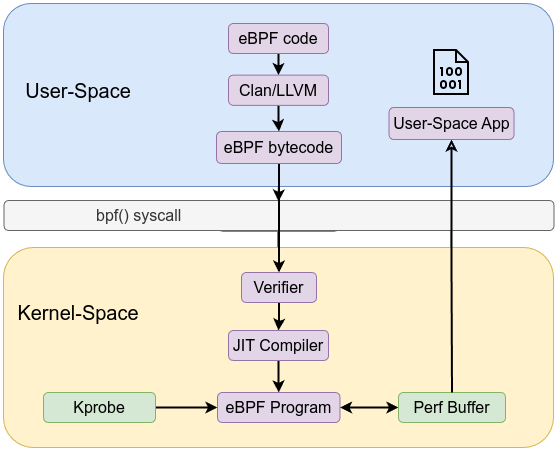

A high-level view of the architecture:

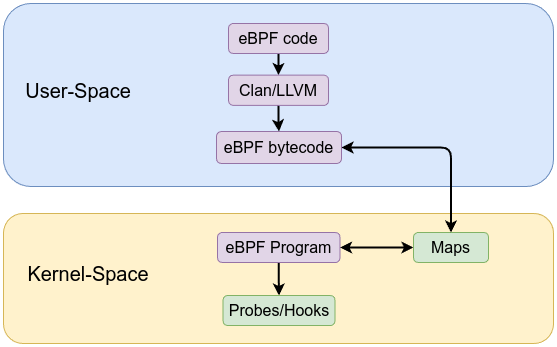

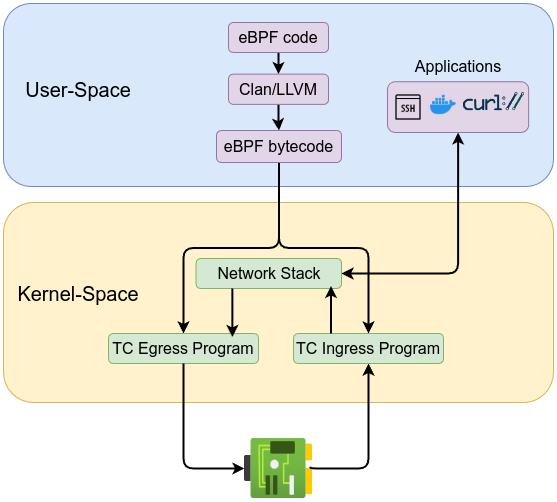

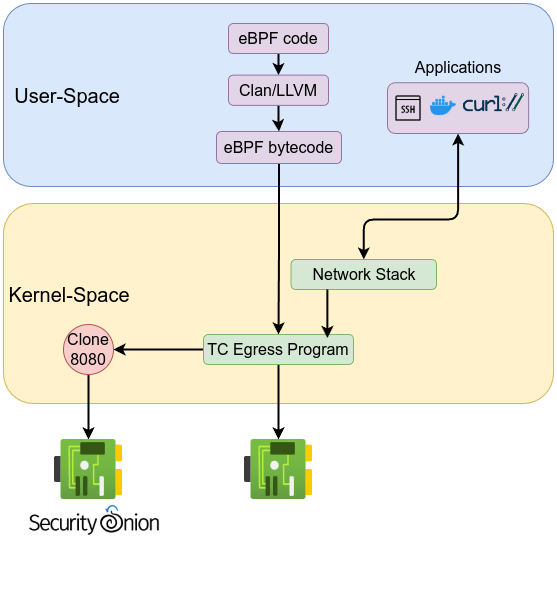

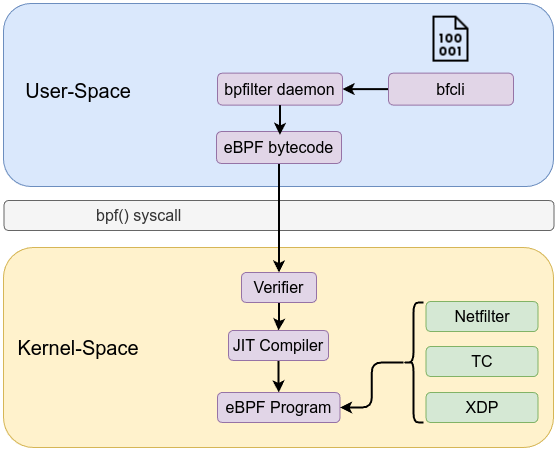

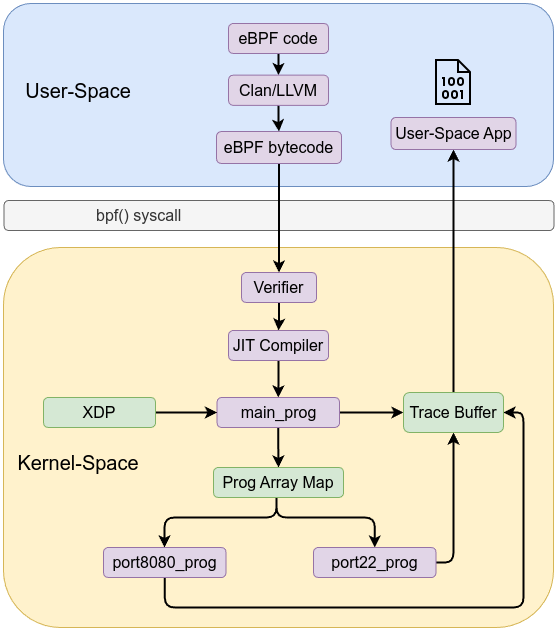

User space tooling compiles and loads these eBPF programs. For example, Clang/LLVM: Compiles C (or other) source code to eBPF bytecode using a special -target bpf flag. The workflow is similar to the following:

bpf() system calls via libbpf or bpftool to load the bytecode into the kernel.This pipeline ensures a controlled, step-by-step process from user space into the kernel.

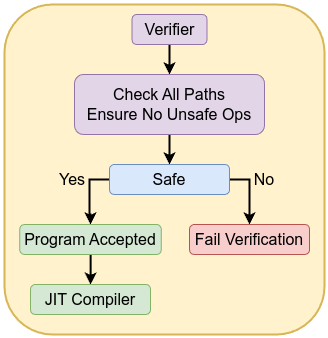

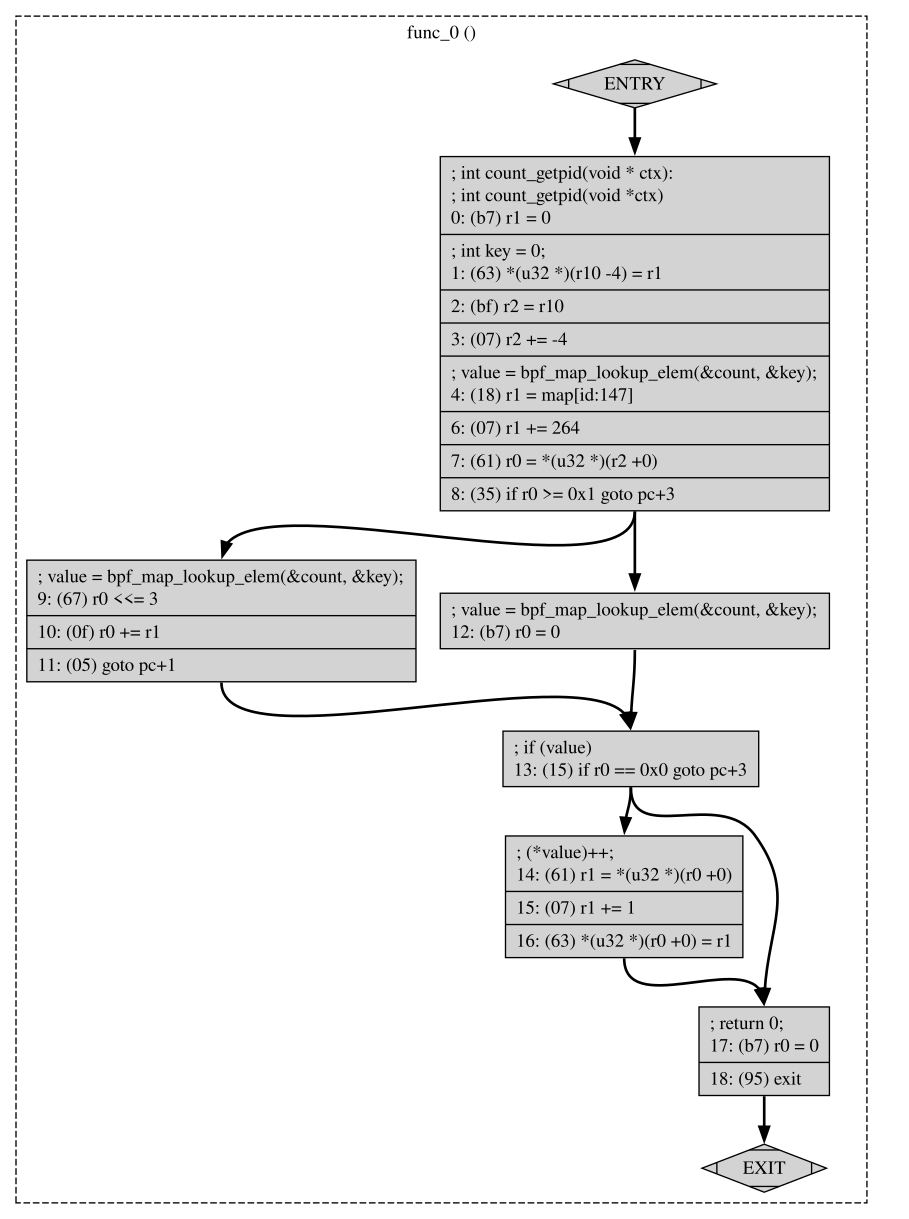

Before an eBPF program runs, it must pass through a static verifier that analyzes every possible execution path. The verifier ensures:

The verifier ensures that once a program is accepted, it cannot violate kernel integrity.

Once verified, eBPF bytecode can be interpreted by an in-kernel virtual machine, or just-in-time compiled into native machine instructions. The JIT compiler:

This makes eBPF suitable for performance-critical tasks, such as packet processing at line rate with XDP (eXpress Data Path).

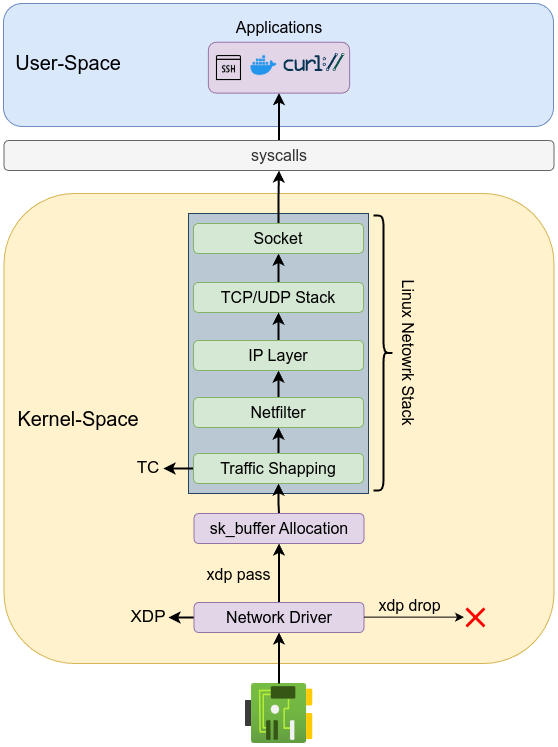

eBPF programs are executed when certain kernel events occur. These events are known as hook points. Common hook points include:

Each hook provides a context—a structured pointer to data relevant to that event (e.g., packet metadata, process info). The program reads fields from this context within verifier-approved bounds, making decisions based on current state.

Maps are the primary mechanism for storing and sharing state between eBPF programs and user space. They enable persistent data storage, counters, histograms, or lookup tables that eBPF code can use at runtime.

The verifier knows the properties of each map and ensures all access is safe (e.g., correct key size, no out-of-bounds reads). This static knowledge allows for safe data sharing between eBPF and user space.

Don’t worry if you don’t fully understand all the details yet—this is completely normal! As we go through applied examples, each step of the architecture will become much clearer, and you’ll be able to see how everything fits together in practice.

The bpf() system call serves as a central mechanism in the Linux kernel for working with the Extended Berkeley Packet Filter (eBPF) subsystem. Originally introduced as a tool to filter packets in the kernel’s networking stack, Berkeley Packet Filters (BPF) allowed user space to define small programs that run efficiently in kernel space. Over time, this concept has evolved significantly from classic BPF (cBPF) to extended BPF (eBPF), which unlocks a far richer set of capabilities. The extended model supports versatile data structures, the ability to attach programs to a variety of kernel subsystems, and the invocation of helper functions that simplify complex operations.

The bpf() system call accepts a command argument determining the exact operation to be performed, and a corresponding attribute structure that passes parameters specific to that operation. Among these operations are commands to load eBPF programs into the kernel, create and manage eBPF maps, attach programs to hooks, and query or manipulate existing eBPF objects. This wide range of functionality makes bpf() a cornerstone of eBPF-based tooling in modern Linux systems.

The kernel ensures eBPF programs cannot crash or destabilize the system through rigorous static analysis at load time. As a result, the bpf() system call provides a secure yet flexible interface for extending kernel functionality.

In the /include/uapi/linux/bpf.h header file, you will find a list of all the commands used in the bpf() syscall. These commands define the actions that can be performed on eBPF objects, such as loading programs, creating maps, attaching programs to kernel events, and more. The commands are organized as part of the enum bpf_cmd, which is used as an argument to specify the desired operation when invoking the bpf() syscall.

Here’s an example of how the enum bpf_cmd is defined in the kernel source:

enum bpf_cmd {

BPF_MAP_CREATE,

BPF_MAP_LOOKUP_ELEM,

BPF_MAP_UPDATE_ELEM,

BPF_MAP_DELETE_ELEM,

BPF_MAP_GET_NEXT_KEY,

BPF_PROG_LOAD,

BPF_OBJ_PIN,

BPF_OBJ_GET,

BPF_PROG_ATTACH,

BPF_PROG_DETACH,

BPF_PROG_TEST_RUN,

BPF_PROG_RUN = BPF_PROG_TEST_RUN,

BPF_PROG_GET_NEXT_ID,

BPF_MAP_GET_NEXT_ID,

BPF_PROG_GET_FD_BY_ID,

BPF_MAP_GET_FD_BY_ID,

BPF_OBJ_GET_INFO_BY_FD,

BPF_PROG_QUERY,

BPF_RAW_TRACEPOINT_OPEN,

BPF_BTF_LOAD,

BPF_BTF_GET_FD_BY_ID,

BPF_TASK_FD_QUERY,

BPF_MAP_LOOKUP_AND_DELETE_ELEM,

BPF_MAP_FREEZE,

BPF_BTF_GET_NEXT_ID,

BPF_MAP_LOOKUP_BATCH,

BPF_MAP_LOOKUP_AND_DELETE_BATCH,

BPF_MAP_UPDATE_BATCH,

BPF_MAP_DELETE_BATCH,

BPF_LINK_CREATE,

BPF_LINK_UPDATE,

BPF_LINK_GET_FD_BY_ID,

BPF_LINK_GET_NEXT_ID,

BPF_ENABLE_STATS,

BPF_ITER_CREATE,

BPF_LINK_DETACH,

BPF_PROG_BIND_MAP,

BPF_TOKEN_CREATE,

__MAX_BPF_CMD,

};

We are not going through the full list but among these commands, one of the most important is bpf_prog_load. This command is used to load an eBPF program into the kernel. By invoking bpf() with the BPF_PROG_LOAD command, the kernel verifies the program’s safety, ensuring that it won’t cause any harm to the system. Upon success, the program is loaded into the kernel, and the system call returns a file descriptor associated with this eBPF program, allowing the program to be attached to various kernel events or subsystems, such as network interfaces, tracepoints, or XDP.

In the kernel’s BPF subsystem, specifically in kernel/bpf/syscall.c, a switch statement is used to dispatch commands defined by the enum bpf_cmd to their corresponding handler functions.

switch (cmd) {

case BPF_MAP_CREATE:

err = map_create(&attr);

break;

case BPF_MAP_LOOKUP_ELEM:

err = map_lookup_elem(&attr);

break;

case BPF_MAP_UPDATE_ELEM:

err = map_update_elem(&attr, uattr);

break;

case BPF_MAP_DELETE_ELEM:

err = map_delete_elem(&attr, uattr);

break;

case BPF_MAP_GET_NEXT_KEY:

err = map_get_next_key(&attr);

break;

case BPF_MAP_FREEZE:

err = map_freeze(&attr);

break;

case BPF_PROG_LOAD:

err = bpf_prog_load(&attr, uattr, size);

break;

[...]

Now, let’s take a closer look at how bpf_prog_load works in practice and how eBPF programs can be loaded into the kernel.

As outlined in man 2 bpf, the bpf_prog_load operation is used to load an eBPF program into the Linux kernel via the bpf() syscall. When successful, this operation returns a new file descriptor associated with the loaded eBPF program. This file descriptor can then be used for operations such as attaching the program to specific kernel events (e.g., networking, tracing), checking its status, or even unloading it when necessary. The BPF_PROG_LOAD operation is invoked through the bpf() syscall to load the eBPF program into the kernel.

char bpf_log_buf[LOG_BUF_SIZE];

int bpf_prog_load(enum bpf_prog_type type,

const struct bpf_insn *insns, int insn_cnt,

const char *license)

{

union bpf_attr attr = {

.prog_type = type,

.insns = ptr_to_u64(insns),

.insn_cnt = insn_cnt,

.license = ptr_to_u64(license),

.log_buf = ptr_to_u64(bpf_log_buf),

.log_size = LOG_BUF_SIZE,

.log_level = 1,

};

return bpf(BPF_PROG_LOAD, &attr, sizeof(attr));

}

Key Parameters:

prog_type: Specifies the type of eBPF program (e.g., BPF_PROG_TYPE_XDP, BPF_PROG_TYPE_KPROBE).insns: The array of eBPF instructions (bytecode) that the program consists of.insn_cnt: The number of instructions in the insns array.license: This attribute specifies the license under which the eBPF program is distributed. It is important for ensuring compatibility with kernel helper functions that are GPL-only. Some eBPF helpers are restricted to being used only in programs that have a GPL-compatible license. Examples of such licenses include “GPL”, “GPL v2”, or “Dual BSD/GPL”. If the program’s license is not compatible with the GPL, it may not be allowed to invoke these specific helper functions.log_buf: A buffer where the kernel stores the verification log if the program fails verification.log_size: The size of the verification log buffer.When a user-space process issues a BPF_PROG_LOAD command, the kernel invokes the bpf_prog_load(&attr, uattr, size) function which is defined in kernel/bpf/syscall.c kernel source code:

static int bpf_prog_load(union bpf_attr *attr, bpfptr_t uattr, u32 uattr_size)

{

enum bpf_prog_type type = attr->prog_type;

struct bpf_prog *prog, *dst_prog = NULL;

struct btf *attach_btf = NULL;

struct bpf_token *token = NULL;

bool bpf_cap;

int err;

char license[128];

[...]

To simplify working with eBPF programs, libbpf provides the bpf_prog_load() function, which abstracts the complexity of interacting with the kernel via the bpf() syscall. This wrapper is located in /tools/lib/bpf/bpf.c and provides additional functionality like retrying failed program loads and setting detailed log options.

int bpf_prog_load(enum bpf_prog_type prog_type,

const char *prog_name, const char *license,

const struct bpf_insn *insns, size_t insn_cnt,

struct bpf_prog_load_opts *opts)

This function simplifies the process of loading eBPF programs by wrapping around the bpf() syscall, handling retries, and providing additional configuration options.

bpf_prog_load_opts structure: This structure provides additional configuration options when loading an eBPF program, as seen below:

struct bpf_prog_load_opts {

size_t sz; // Size of this structure for compatibility

int attempts; // Retry attempts if bpf() returns -EAGAIN

enum bpf_attach_type expected_attach_type; // Expected attachment type

__u32 prog_btf_fd; // BTF file descriptor

__u32 prog_flags; // Program flags

__u32 prog_ifindex; // Interface index for programs like XDP

__u32 kern_version; // Kernel version for compatibility

const int *fd_array; // Array of file descriptors for attachments

const void *func_info; // Function info for BTF

__u32 func_info_cnt; // Function info count

__u32 func_info_rec_size; // Function info record size

const void *line_info; // Line info for BTF

__u32 line_info_cnt; // Line info count

__u32 line_info_rec_size; // Line info record size

__u32 log_level; // Log verbosity for verifier logs

__u32 log_size; // Log buffer size

char *log_buf; // Log buffer

__u32 log_true_size; // Actual log size

__u32 token_fd; // Token file descriptor (optional)

__u32 fd_array_cnt; // Length of fd_array

size_t :0; // Padding for compatibility

};

At the heart of eBPF lies the concept of eBPF maps. Maps are generic, dynamic, kernel-resident data structures accessible from both eBPF programs and user space applications. They allow you to share state and pass information between user space and eBPF code.

The Linux man page (man 2 bpf) states:

eBPF maps are a generic data structure for storage of different data types. Data types are generally treated as binary blobs. A user just specifies the size of the key and the size of the value at map-creation time.

Now, let’s dive into the world of eBPF maps and explore how these powerful data structures are created, accessed, and used within the kernel. By understanding how to interact with maps, you’ll unlock the ability to efficiently store and retrieve data across different eBPF programs.

One of the key design elements that make eBPF so flexible and powerful is the concept of maps. An eBPF map is a data structure residing in the kernel, accessible both by eBPF programs and user space applications. Maps provide a stable way to share state, pass configuration or lookup data, store metrics, and build more complex logic around kernel events.

Unlike traditional kernel data structures, eBPF maps are created, managed, and destroyed via well-defined syscalls and helper functions. They offer a form of persistent kernel memory to eBPF programs, ensuring that data can outlast a single function call or event. This allows administrators and developers to build sophisticated tools for tracing, networking, security, performance monitoring, and more—without modifying or recompiling the kernel.

The Linux kernel defines numerous map types (more than 30 as of this writing), each optimized for different use cases. Some store generic key-value pairs, others store arrays or are used specifically for attaching events, referencing other maps, or implementing special data structures like tries. Choosing the right map type depends on the data and the operations you need to perform.

Before we start with explaining eBPF maps, we need to install either gcc or clang, along with libbpf-dev, to compile our examples. These tools are essential for building and linking the necessary components for eBPF programs. On Debian and Ubuntu, you can install them using the following command:

sudo apt install gcc libbpf-dev

Below, we explore ten commonly used eBPF map types, detailing their conceptual purpose, common use cases, and providing code snippets demonstrating their creation using the bpf_create_map() API.

From the large collection defined in the kernel’s /include/uapi/linux/bpf.h,

enum bpf_map_type {

BPF_MAP_TYPE_UNSPEC,

BPF_MAP_TYPE_HASH,

BPF_MAP_TYPE_ARRAY,

BPF_MAP_TYPE_PROG_ARRAY,

BPF_MAP_TYPE_PERF_EVENT_ARRAY,

BPF_MAP_TYPE_PERCPU_HASH,

BPF_MAP_TYPE_PERCPU_ARRAY,

BPF_MAP_TYPE_STACK_TRACE,

BPF_MAP_TYPE_CGROUP_ARRAY,

BPF_MAP_TYPE_LRU_HASH,

BPF_MAP_TYPE_LRU_PERCPU_HASH,

BPF_MAP_TYPE_LPM_TRIE,

BPF_MAP_TYPE_ARRAY_OF_MAPS,

BPF_MAP_TYPE_HASH_OF_MAPS,

BPF_MAP_TYPE_DEVMAP,

BPF_MAP_TYPE_SOCKMAP,

BPF_MAP_TYPE_CPUMAP,

BPF_MAP_TYPE_XSKMAP,

BPF_MAP_TYPE_SOCKHASH,

BPF_MAP_TYPE_CGROUP_STORAGE_DEPRECATED,

BPF_MAP_TYPE_CGROUP_STORAGE = BPF_MAP_TYPE_CGROUP_STORAGE_DEPRECATED,

BPF_MAP_TYPE_REUSEPORT_SOCKARRAY,

BPF_MAP_TYPE_PERCPU_CGROUP_STORAGE_DEPRECATED,

BPF_MAP_TYPE_PERCPU_CGROUP_STORAGE = BPF_MAP_TYPE_PERCPU_CGROUP_STORAGE_DEPRECATED,

BPF_MAP_TYPE_QUEUE,

BPF_MAP_TYPE_STACK,

BPF_MAP_TYPE_SK_STORAGE,

BPF_MAP_TYPE_DEVMAP_HASH,

BPF_MAP_TYPE_STRUCT_OPS,

BPF_MAP_TYPE_RINGBUF,

BPF_MAP_TYPE_INODE_STORAGE,

BPF_MAP_TYPE_TASK_STORAGE,

BPF_MAP_TYPE_BLOOM_FILTER,

BPF_MAP_TYPE_USER_RINGBUF,

BPF_MAP_TYPE_CGRP_STORAGE,

BPF_MAP_TYPE_ARENA,

__MAX_BPF_MAP_TYPE

};

we’ll focus on these ten map types:

These map types are either widely used or particularly illustrative of eBPF’s capabilities. Together, they represent a broad spectrum of data structures and functionalities.

In eBPF, there are two main components: the eBPF program (which runs in the kernel) and the user-space code (both components will be explained later). It is common to define maps in the eBPF program, but maps are actually created and managed in user-space using the bpf() syscall.

The eBPF program (kernel-side) defines how the map should look and how it will be used by the program. This definition specifies the map’s type, key size, value size, and other parameters. However, the actual creation of the map (allocating memory for it in the kernel and linking it to the eBPF program) occurs in user-space. This process involves invoking the bpf() syscall with the BPF_MAP_CREATE command.

In practice, BTF (BPF Type Format) style maps are the preferred method for defining maps in eBPF programs. Using BTF provides a more flexible, type-safe way to define maps and makes it easier to manage complex data structures. We will explain BTF (BPF Type Format) later in details. When a user-space process issues a BPF_MAP_CREATE command, the kernel invokes the map_create(&attr) function which look like the following:

static int map_create(union bpf_attr *attr)

{

const struct bpf_map_ops *ops;

struct bpf_token *token = NULL;

int numa_node = bpf_map_attr_numa_node(attr);

u32 map_type = attr->map_type;

struct bpf_map *map;

bool token_flag;

int f_flags;

[...]

The bpf_map_create() function is part of the libbpf library, which provides a user-space interface for interacting with eBPF in Linux. Internally, bpf_map_create() sets up the necessary parameters for creating an eBPF map and then makes a call to the bpf() syscall with the BPF_MAP_CREATE command. This function simplifies the process for the user by abstracting away the complexities of directly using the bpf() syscall. It configures the map, including its type, key size, value size, and the number of entries, and once these parameters are prepared, bpf_map_create() invokes the bpf() syscall with the BPF_MAP_CREATE command, instructing the kernel to create the eBPF map. In essence, bpf_map_create() serves as a user-friendly wrapper around the bpf() syscall’s BPF_MAP_CREATE command or map_create function, making it easier for user-space programs to create eBPF maps.

bpf_map_create() wrapper function is defined in the Kernel source code under tools/lib/bpf/bpf.c. The function prototype is as follows:

int bpf_map_create(enum bpf_map_type map_type,

const char *map_name,

__u32 key_size,

__u32 value_size,

__u32 max_entries,

const struct bpf_map_create_opts *opts);

map_type: Specifies the type of the map (e.g., BPF_MAP_TYPE_HASH).map_name: The name of the map.key_size: Size of the key in the map.value_size: Size of the value in the map.max_entries: Maximum number of entries the map can hold.opts: A pointer to the bpf_map_create_opts structure, which contains additional options for map creation (such as flags, BTF information, etc.).The definition for the bpf_map_create_opts structure, part of libbpf, can be found in /tools/lib/bpf/bpf.h

struct bpf_map_create_opts {

size_t sz; /* Size of this struct for forward/backward compatibility */

__u32 btf_fd; /* BTF (BPF Type Format) file descriptor for type information */

__u32 btf_key_type_id; /* BTF key type ID for the map */

__u32 btf_value_type_id; /* BTF value type ID for the map */

__u32 btf_vmlinux_value_type_id; /* BTF vmlinux value type ID for maps */

__u32 inner_map_fd; /* File descriptor for an inner map (for nested maps) */

__u32 map_flags; /* Flags for the map (e.g., read-only, etc.) */

__u64 map_extra; /* Extra space for future expansion or additional settings */

__u32 numa_node; /* NUMA node to assign the map */

__u32 map_ifindex; /* Network interface index for map assignment */

__s32 value_type_btf_obj_fd; /* File descriptor for the BTF object corresponding to the value type */

__u32 token_fd; /* BPF token FD passed in a corresponding command's token_fd field */

size_t :0; /* Reserved for future compatibility (bitfield) */

};

sz: Size of the structure, ensuring forward/backward compatibility.btf_fd, btf_key_type_id, btf_value_type_id, and btf_vmlinux_value_type_id: These fields are related to the BPF Type Format (BTF), which provides type information for the map’s key and value typesinner_map_fd: The file descriptor of an inner map if the map is being used as part of a nested structure.map_flags: Flags that modify the behavior of the map, such as setting the map to read-only or enabling special features (e.g., memory-mapping).map_extra: Reserved for future extensions to the structure or additional configuration.numa_node: Specifies the NUMA node for memory locality when creating the map (used for NUMA-aware systems).map_ifindex: Specifies the network interface index for associating the map with a specific network interface.value_type_btf_obj_fd: A file descriptor pointing to the BTF object representing the map’s value type.token_fd: A token FD for passing file descriptors across different BPF operations.These fields allow for fine-grained control over how the eBPF map behaves, including its memory allocation, access permissions, and type information. Don’t worry about these details now, as some of them will be used shortly when we dive into more examples.

Now that we’ve covered the basics of map creation, let’s start exploring some of the most commonly used eBPF map types.

SEC(".maps")) so that the eBPF program can use it. The “Hash Map User-Space Example snippet”, on the other hand, shows how user-space code (using libbpf and BPF syscalls) can interact with the map—such as creating or obtaining a file descriptor for the map.

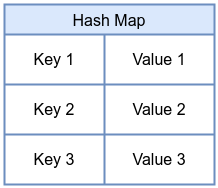

A hash map stores key-value pairs. Each key maps to a corresponding value, and both the key and value have fixed sizes determined at creation time. The hash map provides fast lookups and updates, making it a great choice for data that changes frequently. Common uses include tracking connection states in networking, counting events keyed by process ID or file descriptor, or caching metadata for quick lookups.

BTF Map Definition in eBPF Program

struct {

__uint(type, BPF_MAP_TYPE_HASH);

__type(key, int);

__type(value, int);

__uint(max_entries, 1024);

} hash_map_example SEC(".maps");

Hash Map User-Space Example

#include <bpf/libbpf.h>

#include <bpf/bpf.h>

#include <errno.h>

#include <unistd.h>

int create_hash_map(void) {

int fd = bpf_map_create(BPF_MAP_TYPE_HASH, "hash_map_example",

sizeof(int), // key_size

sizeof(int), // value_size

1024, // max_entries

NULL); // map_flags

if (fd < 0) {

fprintf(stderr, "Failed to create hash map: %s\n", strerror(errno));

}

return fd;

}

int main() {

int fd = create_hash_map();

if (fd >= 0) {

printf("Hash map created successfully with fd: %d\n", fd);

close(fd);

}

return 0;

}

We can compile it using gcc -o hash_map hash_map.c -lbpf and -lbpf which tells the compiler to link against the libbpf library, or by using clang -o ring_buff ring_buff.c -lbpf

close() when you’re done using a BPF map file descriptor to ensure proper resource management, prevent resource leaks, and allow the system to release the map’s resources.

CAP_BPF.

To run this program, you need to use the sudo commandsudo ./hash_map

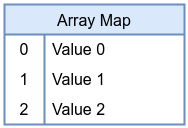

An array map stores a fixed number of elements indexed by integer keys. Unlike a hash map, array keys are not arbitrary—they are simply indexes from 0 to max_entries -1. This simplifies lookups and can provide stable, predictable memory usage. Array maps are perfect for scenarios where you know the exact number of elements you need and require constant-time indexed access. Typical uses include lookup tables, static configuration data, or indexing CPU-related counters by CPU number.

BTF Map Definition in eBPF Program

struct {

__uint(type, BPF_MAP_TYPE_ARRAY);

__type(key, int);

__type(value, int);

__uint(max_entries, 256);

} array_map_example SEC(".maps");

Array Map User-Space Example

#include <bpf/libbpf.h>

#include <bpf/bpf.h>

#include <errno.h>

#include <unistd.h>

int create_array_map(void) {

int fd = bpf_map_create(BPF_MAP_TYPE_ARRAY, "array_map_example",

sizeof(int), // key_size

sizeof(int), // value_size

256, // max_entries

NULL); // map_flags

if (fd < 0) {

fprintf(stderr, "Failed to create array map: %s\n", strerror(errno));

}

return fd;

}

int main() {

int fd = create_array_map();

if (fd >= 0) {

printf("Array map created successfully with fd: %d\n", fd);

close(fd);

}

return 0;

}

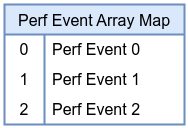

The Perf Event Array map provides a mechanism to redirect perf events (such as hardware counters or software events) into user space using the perf ring buffer infrastructure. By attaching eBPF programs to perf events and using this map, you can efficiently gather performance metrics from the kernel, making it a cornerstone of low-overhead performance monitoring and observability tools.

BTF Map Definition in eBPF Program

struct {

__uint(type, BPF_MAP_TYPE_PERF_EVENT_ARRAY);

__type(key, int);

__type(value, int);

__uint(max_entries, 64);

} perf_event_array_example SEC(".maps");

max_entries as it will be set automatically to the number of CPUs on your computer by libbpf as per https://nakryiko.com/posts/bpf-ringbuf/.

Pert Event Array Map User-Space Example

#include <bpf/libbpf.h>

#include <bpf/bpf.h>

#include <errno.h>

#include <unistd.h>

int create_perf_event_array_map(void) {

int fd = bpf_map_create(BPF_MAP_TYPE_PERF_EVENT_ARRAY, "perf_event_array_example",

sizeof(int), // key_size

sizeof(int), // value_size (fd)

64, // max_entries (for events)

NULL); // map_flags

if (fd < 0) {

fprintf(stderr, "Failed to create perf event array map: %s\n", strerror(errno));

}

return fd;

}

int main() {

int fd = create_perf_event_array_map();

if (fd >= 0) {

printf("perf event array map created successfully with fd: %d\n", fd);

close(fd);

}

return 0;

}

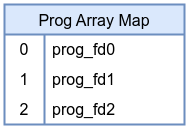

A program array holds references to other eBPF programs, enabling tail calls. Tail calls allow one eBPF program to jump into another without returning, effectively chaining multiple programs into a pipeline. This map type is essential for building modular and dynamic eBPF toolchains that can be reconfigured at runtime without reloading the entire set of programs.

BTF Map Definition in eBPF Program

struct {

__uint(type, BPF_MAP_TYPE_PROG_ARRAY);

__type(key, int);

__type(value, int);

__uint(max_entries, 32);

} prog_array_example SEC(".maps");

Prog Array Map User-Space Example

#include <bpf/libbpf.h>

#include <bpf/bpf.h>

#include <errno.h>

#include <unistd.h>

int create_prog_array_map(void) {

int fd = bpf_map_create(BPF_MAP_TYPE_PROG_ARRAY, "prog_array_example",

sizeof(int), // key_size

sizeof(int), // value_size (prog FD)

32, // max_entries

NULL);

if (fd < 0) {

fprintf(stderr, "Failed to create prog array map: %s\n", strerror(errno));

}

return fd;

}

int main() {

int fd = create_prog_array_map();

if (fd >= 0) {

printf("Prog array map created successfully with fd: %d\n", fd);

close(fd);

}

return 0;

}

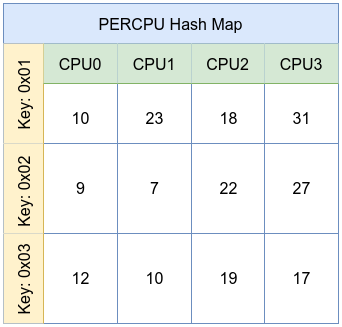

A per-CPU hash map is similar to a standard hash map but stores distinct values for each CPU. This design minimizes lock contention and cache-line ping-ponging, allowing for extremely high-performance counting or state tracking when updates are frequent. Each CPU updates its own version of the value, and user space can aggregate these values later.

BTF Map Definition in eBPF Program

struct {

__uint(type, BPF_MAP_TYPE_PERCPU_HASH);

__type(key, int);

__type(value, int);

__uint(max_entries, 1024);

} percpu_hash_example SEC(".maps");

PERCPU Hash Map User-Space Example

#include <bpf/libbpf.h>

#include <bpf/bpf.h>

#include <errno.h>

#include <unistd.h>

int create_percpu_hash_map(void) {

int fd = bpf_map_create(BPF_MAP_TYPE_PERCPU_HASH, "percpu_hash_example",

sizeof(int), // key_size

sizeof(int), // value_size

1024, // max_entries

NULL);

if (fd < 0) {

fprintf(stderr, "Failed to create PERCPU hash map: %s\n", strerror(errno));

}

return fd;

}

int main() {

int fd = create_percpu_hash_map();

if (fd >= 0) {

printf("PERCPU hash map created successfully with fd: %d\n", fd);

close(fd);

}

return 0;

}

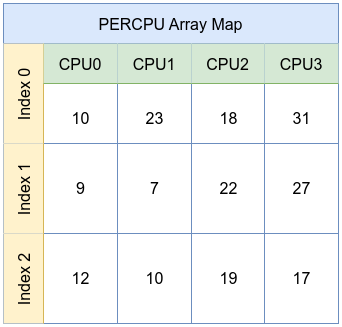

A per-CPU array, like the per-CPU hash, stores distinct copies of array elements for each CPU. This further reduces contention, making it ideal for per-CPU statistics counters, histograms, or other metrics that need to be incremented frequently without facing synchronization overhead.

BTF Map Definition in eBPF Program

struct {

__uint(type, BPF_MAP_TYPE_PERCPU_ARRAY);

__type(key, int);

__type(value, int);

__uint(max_entries, 1024);

} percpu_array_example SEC(".maps");

PERCPU Array Map User-Space Example

#include <bpf/libbpf.h>

#include <bpf/bpf.h>

#include <errno.h>

#include <unistd.h>

int create_percpu_array_map(void) {

int fd = bpf_map_create(BPF_MAP_TYPE_PERCPU_ARRAY, "percpu_array_example",

sizeof(int), // key_size

sizeof(int), // value_size

128, // max_entries

NULL);

if (fd < 0) {

fprintf(stderr, "Failed to create PERCPU array map: %s\n", strerror(errno));

}

return fd;

}

int main() {

int fd = create_percpu_array_map();

if (fd >= 0) {

printf("PERCPU array map created successfully with fd: %d\n", fd);

close(fd);

}

return 0;

}

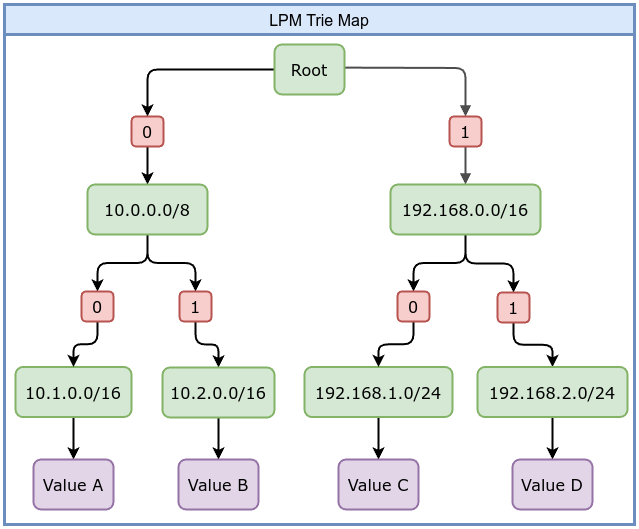

The LPM (Longest Prefix Match) Trie map is designed for prefix-based lookups, commonly used in networking. For example, you might store IP prefixes (like CIDR blocks) and quickly determine which prefix best matches a given IP address. This is useful for routing, firewall rules, or policy decisions based on IP addresses.

BPF_F_NO_PREALLOC flag. This flag prevents the kernel from pre-allocating memory for all entries at map creation time, allowing the map to dynamically allocate memory as needed.

For example, if you create a map that is intended to hold 1,000 entries, the kernel might allocate memory for all 1,000 entries at map creation time. However, in the case of an LPM Trie map, the situation is different. An LPM Trie map is used for prefix-based lookups, such as storing CIDR blocks or IP address prefixes for example 192.168.0.0/24. The number of entries and the amount of memory required for the map can vary depending on the data stored. You can still specify a max_entries value when creating an LPM Trie map, but it is important to note that the kernel will ignore this value. The actual number of entries in an LPM Trie map depends on how many prefixes are inserted, and the map dynamically allocates memory based on the prefixes.

BTF Map Definition in eBPF Program

struct {

__uint(type, BPF_MAP_TYPE_LPM_TRIE);

__type(key, int);

__type(value, int);

__uint(max_entries, 1024);

} lpm_trie_example SEC(".maps");

LPM Trie Map User-Space Example

#include <bpf/libbpf.h>

#include <bpf/bpf.h>

#include <errno.h>

#include <unistd.h>

int create_lpm_trie_map(void) {

struct bpf_map_create_opts opts = {0};

opts.sz = sizeof(opts);

opts.map_flags = BPF_F_NO_PREALLOC;

int fd = bpf_map_create(BPF_MAP_TYPE_LPM_TRIE, "lpm_trie_example",

8, // key_size

sizeof(long), // value_size

1024, // max_entries and it will ignored

&opts);

if (fd < 0) {

fprintf(stderr, "Failed to create LMP trie map: %s\n", strerror(errno));

}

return fd;

}

int main() {

int fd = create_lpm_trie_map();

if (fd >= 0) {

printf("LMP trie map created successfully with fd: %d\n", fd);

close(fd);

}

return 0;

}

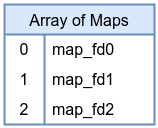

An array-of-maps stores references to other maps. Each element in this array is itself a map FD. This structure allows building hierarchical or modular configurations. For example, you might keep a set of hash maps, each representing a different tenant or set of rules, and select which one to use at runtime by indexing into the array-of-maps. In the following example, we will first create a hash map to serve as the inner map, and then use this hash map as the reference for creating an array of maps.

BTF Map Definition in eBPF Program

struct inner_map {

__uint(type, BPF_MAP_TYPE_HASH);

__type(key, int);

__type(value, int);

__uint(max_entries, 1024);

} hash_map_example SEC(".maps");

struct {

__uint(type, BPF_MAP_TYPE_ARRAY_OF_MAPS);

__type(key, int);

__type(value, int);

__uint(max_entries, 4);

__array(values, struct {

__uint(type, BPF_MAP_TYPE_HASH);

__type(key, int);

__type(value, int);

__uint(max_entries, 1024);

});

} array_of_maps_map_example SEC(".maps");

Array of Maps Map User-Space Example

#include <bpf/libbpf.h>

#include <bpf/bpf.h>

#include <errno.h>

#include <unistd.h>

int create_inner_hash_map(void) {

int fd = bpf_map_create(BPF_MAP_TYPE_HASH, "hash_map_example",

sizeof(int), // key_size

sizeof(int), // value_size

1024, // max_entries

NULL); // map_flags

if (fd < 0) {

fprintf(stderr, "Failed to create hash map: %s\n", strerror(errno));

}

return fd;

}

int create_array_of_maps(int inner_map_fd) {

struct bpf_map_create_opts opts = {0};

opts.sz = sizeof(opts);

opts.inner_map_fd = inner_map_fd; // Specify the inner map FD

int fd = bpf_map_create(BPF_MAP_TYPE_ARRAY_OF_MAPS, "array_of_maps_map_example",

sizeof(int), // key_size

sizeof(int), // value_size (placeholder for map FD)

4, // max_entries

&opts);

if (fd < 0) {

fprintf(stderr, "Failed to create Array of maps map: %s\n", strerror(errno));

}

return fd;

}

int main() {

// Step 1: Create the inner hash map

int inner_map_fd = create_inner_hash_map();

if (inner_map_fd >= 0) {

printf("Hash map created successfully with fd: %d\n", inner_map_fd);

close(inner_map_fd);

}

// Step 2: Create the array of maps, using the inner map FD

int array_of_maps_fd = create_array_of_maps(inner_map_fd);

if (array_of_maps_fd >= 0) {

printf("Array of maps map created successfully with fd: %d\n", array_of_maps_fd);

close(array_of_maps_fd);

}

if (inner_map_fd >= 0) {

close(inner_map_fd);

}

if (array_of_maps_fd >= 0) {

close(array_of_maps_fd);

}

return 0;

}

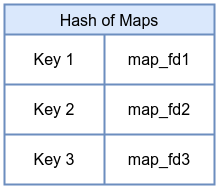

A hash-of-maps extends the concept of array-of-maps to dynamic keying. Instead of indexing by integer, you can use arbitrary keys to select which map is referenced. This allows flexible and dynamic grouping of maps, where user space can manage complex configurations by updating keys and associated map FDs. Again, bpf_create_map() cannot set inner_map_fd, so this example is minimal.

BTF Map Definition in eBPF Program

struct inner_map {

__uint(type, BPF_MAP_TYPE_HASH);

__type(key, int);

__type(value, int);

__uint(max_entries, 1024);

} hash_map_example SEC(".maps");

struct {

__uint(type, BPF_MAP_TYPE_HASH_OF_MAPS);

__type(key, int);

__type(value, int);

__uint(max_entries, 4);

__array(values, struct {

__uint(type, BPF_MAP_TYPE_HASH);

__type(key, int);

__type(value, int);

__uint(max_entries, 1024);

});

} hash_of_maps_map_example SEC(".maps");

Hash of Maps Map User-Space Example

#include <bpf/libbpf.h>

#include <bpf/bpf.h>

#include <errno.h>

#include <unistd.h>

int create_inner_hash_map(void) {

int fd = bpf_map_create(BPF_MAP_TYPE_HASH, "hash_map_example",

sizeof(int), // key_size

sizeof(int), // value_size

1024, // max_entries

NULL); // map_flags

if (fd < 0) {

fprintf(stderr, "Failed to create hash map: %s\n", strerror(errno));

}

return fd;

}

int create_hash_of_maps(int inner_map_fd) {

struct bpf_map_create_opts opts = {0};

opts.sz = sizeof(opts);

opts.inner_map_fd = inner_map_fd; // Specify the inner map FD

int fd = bpf_map_create(BPF_MAP_TYPE_HASH_OF_MAPS, "hash_of_maps_map_example",

sizeof(int), // key_size

sizeof(int), // value_size (placeholder for map FD)

4, // max_entries

&opts);

if (fd < 0) {

fprintf(stderr, "Failed to create hash of maps map: %s\n", strerror(errno));

}

return fd;

}

int main() {

// Step 1: Create the inner hash map

int inner_map_fd = create_inner_hash_map();

if (inner_map_fd >= 0) {

printf("Hash map created successfully with fd: %d\n", inner_map_fd);

}

// Step 2: Create the array of maps, using the inner map FD

int array_of_maps_fd = create_hash_of_maps(inner_map_fd);

if (array_of_maps_fd >= 0) {

printf("Array_of_maps map created successfully with fd: %d\n", array_of_maps_fd);

}

if (inner_map_fd >= 0) {

close(inner_map_fd);

}

if (array_of_maps_fd >= 0) {

close(array_of_maps_fd);

}

return 0;

}

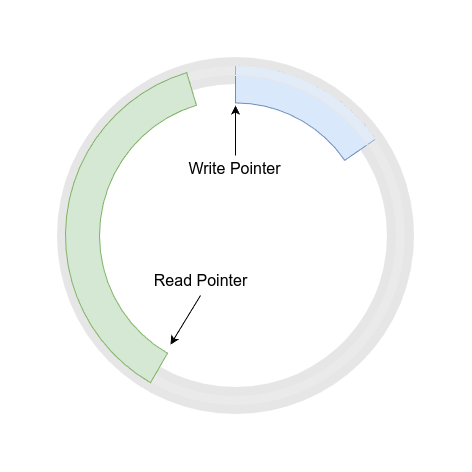

The ring buffer map is a relatively new addition that enables lock-free communication from kernel to user space. Instead of performing lookups or updates for each record, the kernel-side eBPF program writes events into the ring buffer, and user space reads them as a continuous stream. A ring buffer is a circular data structure that uses a continuous block of memory to store data sequentially. When data is added to the buffer and the end is reached, new data wraps around to the beginning, potentially overwriting older data if it hasn’t been read yet.

A typical ring buffer uses two pointers (or “heads”): one for writing and one for reading. The write pointer marks where new data is added, while the read pointer indicates where data should be consumed. This dual-pointer system allows for efficient and concurrent operations, ensuring that the writer doesn’t overwrite data before the reader has processed it. Additionally, the ring buffer is shared across all CPUs, consolidating events from multiple cores into a single stream. This greatly reduces overhead for high-volume event reporting, making it ideal for profiling, tracing, or continuous monitoring tools.

BTF Map Definition in eBPF Program

struct {

__uint(type, BPF_MAP_TYPE_RINGBUF);

__uint(max_entries, 4096); //It must be a power of 2

} ring_buffer_map_example SEC(".maps");

max_entries in BPF_MAP_TYPE_RINGBUF must be a power of 2 such as 4096.

Ring Buffer Map User-Space Example

#include <bpf/libbpf.h>

#include <bpf/bpf.h>

#include <errno.h>

#include <unistd.h>

int create_ringbuf_map(void) {

// The size of the ringbuf is given in bytes by max_entries.

int fd = bpf_map_create(BPF_MAP_TYPE_RINGBUF, "ring_buffer_map_example",

0, // key_size = 0 for ringbuf

0, // value_size = 0 for ringbuf

4096,

NULL);

if (fd < 0) {

fprintf(stderr, "Failed to create ring buffer map: %s\n", strerror(errno));

}

return fd;

}

int main() {

int fd = create_ringbuf_map();

if (fd >= 0) {

printf("Ring buffer map created successfully with fd: %d\n", fd);

close(fd);

}

return 0;

}

By combining these map types with your eBPF programs, you can build sophisticated, runtime-configurable kernel instrumentation, security monitors, network traffic analyzers, and performance profiling tools. Each map type adds a new capability or performance characteristic, allowing developers to craft solutions that were previously challenging or impossible without kernel modifications. This flexibility not only enhances existing solutions but also opens up new possibilities for kernel-level programming. Given the ongoing evolution of eBPF, selecting the right map type for your use case becomes even more important. When doing so, it’s essential to consider factors such as access patterns, data size, performance requirements, and the complexity of your architecture. Here are some things to keep in mind:

BPF_MAP_TYPE_ARRAY. If keys are dynamic or unpredictable, BPF_MAP_TYPE_HASH might be the go-to.BPF_MAP_TYPE_PERCPU_HASH or BPF_MAP_TYPE_PERCPU_ARRAY) can reduce contention. Similarly, BPF_MAP_TYPE_RINGBUF is the perfect fit for high-throughput streaming scenarios.BPF_MAP_TYPE_PROG_ARRAY, BPF_MAP_TYPE_ARRAY_OF_MAPS, or BPF_MAP_TYPE_HASH_OF_MAPS to facilitate more sophisticated architectures.BPF_MAP_TYPE_LPM_TRIE offers a specialized structure optimized for network prefixes and routing logic.BPF_MAP_TYPE_PERF_EVENT_ARRAY ties into the Linux perf subsystem, enabling advanced performance monitoring and event correlation in conjunction with eBPF programs.eBPF map operations are a set of functions defined in the Linux kernel that allow interaction with eBPF maps. These operations enable reading, writing, deleting, and managing data within the maps. The operations are part of the bpf_cmd defined in the kernel source file /include/uapi/linux/bpf.h. Some commonly used operations include:

BPF_MAP_CREATE: Creates a new eBPF map. This operation sets up a map.BPF_MAP_UPDATE_ELEM: Inserts or updates a key-value pair.BPF_MAP_LOOKUP_ELEM: Retrieves the value associated with a given key.BPF_MAP_DELETE_ELEM: Deletes a key-value pair by its key.BPF_MAP_LOOKUP_AND_DELETE_ELEM: Retrieves a value by key and deletes the entry in one step.BPF_MAP_GET_NEXT_KEY: Iterates through the keys in the map.BPF_MAP_LOOKUP_BATCH: Retrieves multiple entries in a single call.BPF_MAP_UPDATE_BATCH: Updates multiple entries at once.BPF_MAP_DELETE_BATCH: Deletes multiple entries in one operation.BPF_MAP_FREEZE: Converts the map into a read-only state.BPF_OBJ_PIN: Pins the map to the BPF filesystem so it persists beyond the process’s lifetime.BPF_OBJ_GET: Retrieves a previously pinned map.These operations allow efficient data sharing between eBPF programs and user-space applications. The bpf() syscall is used to perform these operations, providing a flexible interface for interacting with eBPF maps. Each operation serves a specific purpose.

In this chapter, we have already explained BPF_MAP_CREATE in detail , so we will not cover it again. Instead, we will focus on the rest. We will show how to use them with a simple hash map and explain the code thoroughly.

Some map operations differ between user-space and kernel-space code. In user-space, you interact with eBPF maps using file descriptors and functions that often require an output parameter, Libbpf provides convenient wrappers for these commands which are defined in tools/lib/bpf/bpf.c.

int bpf_map_update_elem(int fd, const void *key, const void *value,

__u64 flags)

{

const size_t attr_sz = offsetofend(union bpf_attr, flags);

union bpf_attr attr;

int ret;

memset(&attr, 0, attr_sz);

attr.map_fd = fd;

attr.key = ptr_to_u64(key);

attr.value = ptr_to_u64(value);

attr.flags = flags;

ret = sys_bpf(BPF_MAP_UPDATE_ELEM, &attr, attr_sz);

return libbpf_err_errno(ret);

}

int bpf_map_lookup_elem(int fd, const void *key, void *value)

{

const size_t attr_sz = offsetofend(union bpf_attr, flags);

union bpf_attr attr;

int ret;

memset(&attr, 0, attr_sz);

attr.map_fd = fd;

attr.key = ptr_to_u64(key);

attr.value = ptr_to_u64(value);

ret = sys_bpf(BPF_MAP_LOOKUP_ELEM, &attr, attr_sz);

return libbpf_err_errno(ret);

}

[...]

whereas in kernel-space (within eBPF programs) perform equivalent operations using built-in helper functions which are defined in kernel/bpf/helpers.c.

BPF_CALL_2(bpf_map_lookup_elem, struct bpf_map *, map, void *, key)

{

WARN_ON_ONCE(!rcu_read_lock_held() && !rcu_read_lock_trace_held() &&

!rcu_read_lock_bh_held());

return (unsigned long) map->ops->map_lookup_elem(map, key);

}

const struct bpf_func_proto bpf_map_lookup_elem_proto = {

.func = bpf_map_lookup_elem,

.gpl_only = false,

.pkt_access = true,

.ret_type = RET_PTR_TO_MAP_VALUE_OR_NULL,

.arg1_type = ARG_CONST_MAP_PTR,

.arg2_type = ARG_PTR_TO_MAP_KEY,

};

BPF_CALL_4(bpf_map_update_elem, struct bpf_map *, map, void *, key,

void *, value, u64, flags)

{

WARN_ON_ONCE(!rcu_read_lock_held() && !rcu_read_lock_trace_held() &&

!rcu_read_lock_bh_held());

return map->ops->map_update_elem(map, key, value, flags);

}

Additionally, some operations—like batch operations and object pinning/getting—are implemented in the kernel but are intended to be invoked from user-space via system calls or libbpf rather than being used directly inside eBPF programs. We’ll explain each operation in both contexts.

BPF_MAP_UPDATE_ELEM command inserts or updates a key-value pair in the map.

The function bpf_map_update_elem is a libbpf wrapper for BPF_MAP_UPDATE_ELEM command, it’s part of the libbpf library, which provides a user-space interface for interacting with eBPF maps in the Linux kernel with prototype as follows:

int bpf_map_update_elem(int fd, const void *key, const void *value, __u64 flags);

It takes a map file descriptor, pointers to the key and value, and a flag indicating how the update should be performed. The flag can be

BPF_NOEXIST to insert only if the key does not exist.BPF_EXIST to update only if the key already exists.BPF_ANY to insert or update unconditionally.

On success, this call returns zero. On failure, it returns -1 and sets errno to indicate the cause of the error. For instance, if you use BPF_NOEXIST but the key already exists, it returns EEXIST. If you use BPF_EXIST but the key does not exist, it returns ENOENT which is No such file or directory.BPF_NOEXIST isn’t supported for array type maps since all keys always exist.

Here is an example that first tries to insert a new element using BPF_NOEXIST and then updates it using BPF_EXIST:

#include <bpf/libbpf.h>

#include <bpf/bpf.h>

#include <errno.h>

#include <unistd.h>

int create_hash_map(void) {

int fd = bpf_map_create(BPF_MAP_TYPE_HASH, "hash_map_example",

sizeof(int), // key_size

sizeof(int), // value_size

1024, // max_entries

NULL); // map_flags

if (fd < 0) {

fprintf(stderr, "Failed to create hash map: %s\n", strerror(errno));

}

return fd;

}

int main() {

int fd = create_hash_map();

if (fd >= 0) {

printf("Hash map created successfully with fd: %d\n", fd);

// Insert elements in the hash map

int key = 5;

long value = 100;

if (bpf_map_update_elem(fd, &key, &value, BPF_ANY) == 0) { // BPF_ANY means insert or update

printf("Element inserted or updated successfully: key = %d, value = %ld\n", key, value);

} else {

fprintf(stderr, "Failed to insert or update element: %s\n", strerror(errno));

close(fd); // Close the map before returning

return -1;

}

// Update an element in the hash map

value = 200;

if (bpf_map_update_elem(fd, &key, &value, BPF_EXIST) == 0) {

printf("Element inserted or updated successfully: key = %d, value = %ld\n", key, value);

} else {

fprintf(stderr, "Failed to insert or update element: %s\n", strerror(errno));

close(fd); // Close the map before returning

return -1;

}

// Update an element that doesn't exist in the hash map

key = 4;

value = 300;

if (bpf_map_update_elem(fd, &key, &value, BPF_EXIST) == 0) {

printf("Element inserted or updated successfully: key = %d, value = %ld\n", key, value);

} else {

fprintf(stderr, "Failed to insert or update element: %s\n", strerror(errno));

close(fd); // Close the map before returning

return -1;

}

close(fd);

return 0;

}

return -1;

}

To compile and run the program, use the following command: gcc -o update-ebpf-map update-ebpf-map.c -lbpf.

Then, execute the program with: sudo ./update-ebpf-map.

We first create a map and then insert (5 -> 100) using BPF_ANY, which either inserts or updates unconditionally. Since the map was empty, (5 -> 100) is inserted. Next, we update (5 -> 100) to (5 -> 200) using BPF_EXIST, ensuring that the key must exist beforehand. The operation succeeds, and the value associated with key 5 is now 200.

Then, we try to insert (4 -> 300) using BPF_EXIST, which requires the key to already exist in the map. Since the key 4 does not exist in the map, the operation fails, and the error ENOENT is triggered, resulting in the message “Failed to insert or update element: No such file or directory.”

The output from running the program (sudo ./update-ebpf-map) is as follows:

Hash map created successfully with fd: 3

Element inserted or updated successfully: key = 5, value = 100

Element inserted or updated successfully: key = 5, value = 200

Failed to insert or update element: No such file or directory

int bpf_map_update_elem(void *map, const void *key, const void *value, __u64 flags);

BPF_MAP_LOOKUP_ELEM command is used to retrieve the value associated with a given key. The bpf_map_lookup_elem function is a libbpf wrapper for BPF_MAP_LOOKUP_ELEM command and its prototype is : int bpf_map_lookup_elem(int fd, const void *key, void *value) , so you provide the map’s file descriptor, a pointer to the key you want to look up, and a pointer to a buffer where the value will be stored if the key is found. If the operation succeeds, it returns zero, and the value is copied into the user-provided buffer. If the key does not exist, it returns -1 and sets errno to ENOENT.

Consider a scenario where you have inserted an entry (key=10, value=100) into the map. Looking it up would look like this:

#include <bpf/libbpf.h>

#include <bpf/bpf.h>

#include <errno.h>

#include <unistd.h>

int create_hash_map(void) {

int fd = bpf_map_create(BPF_MAP_TYPE_HASH, "hash_map_example",

sizeof(int), // key_size

sizeof(int), // value_size

1024, // max_entries